This post describes the process behind the Kinected Portrait Series.

A custom openFrameworks application captured depth data with a Microsoft Kinect. The depth data was calibrated (registered) to a color image captured by the same Kinect. The raw color image and depth data are shown below.

Kinect color image

Kinect depth data

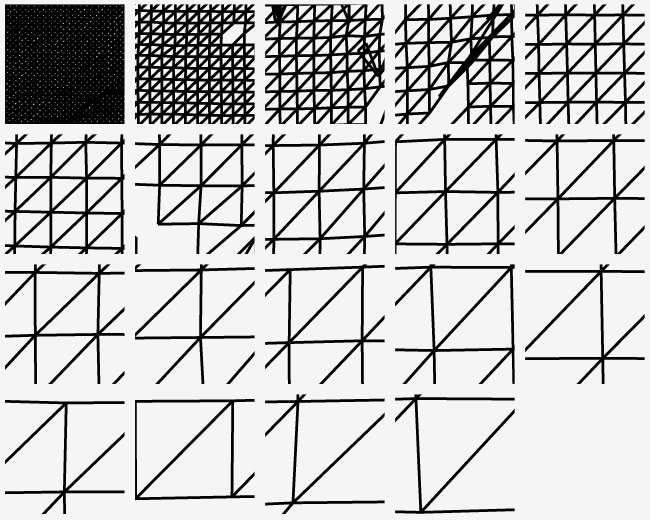
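The post doesn't say how the depth-to-color calibration was done, and the original application may well have relied on an alignment provided by the Kinect driver. As a hedged illustration of what such a registration involves, here is a minimal pinhole-camera sketch: back-project a depth pixel into 3D with the depth camera's intrinsics, move the point into the color camera's frame with a rotation and translation, and project it with the color camera's intrinsics. The names `Intrinsics`, `Extrinsics` and `depthToColor` are hypothetical, and real parameter values would come from a calibration step.

```cpp
#include <array>
#include <cassert>

// Pinhole intrinsics in pixels: focal lengths and principal point.
struct Intrinsics {
    double fx, fy, cx, cy;
};

// Hypothetical rigid transform from the depth camera's frame to the color
// camera's frame: row-major 3x3 rotation R and translation t (same units as z).
struct Extrinsics {
    std::array<double, 9> R;
    std::array<double, 3> t;
};

struct Pixel {
    double u, v;
};

// Maps depth pixel (u, v) with depth z into color-image coordinates.
// Returns false when the depth is missing or the point lands behind the color camera.
bool depthToColor(double u, double v, double z,
                  const Intrinsics& depthCam, const Intrinsics& colorCam,
                  const Extrinsics& ext, Pixel& out) {
    assert(depthCam.fx > 0.0 && depthCam.fy > 0.0);
    if (z <= 0.0) return false;  // treat zero depth as "no reading"

    // 1. Back-project into a 3D point in the depth camera's frame.
    const double X = (u - depthCam.cx) * z / depthCam.fx;
    const double Y = (v - depthCam.cy) * z / depthCam.fy;
    const double Z = z;

    // 2. Move the point into the color camera's frame: P' = R * P + t.
    const auto& R = ext.R;
    const double Xc = R[0] * X + R[1] * Y + R[2] * Z + ext.t[0];
    const double Yc = R[3] * X + R[4] * Y + R[5] * Z + ext.t[1];
    const double Zc = R[6] * X + R[7] * Y + R[8] * Z + ext.t[2];
    if (Zc <= 0.0) return false;

    // 3. Project onto the color image plane.
    out.u = colorCam.fx * Xc / Zc + colorCam.cx;
    out.v = colorCam.fy * Yc / Zc + colorCam.cy;
    return true;
}
```

With identical intrinsics and an identity transform every pixel maps to itself; a nonzero translation shifts pixels by an amount that shrinks as depth grows, which is why a single fixed 2D offset cannot align the two images at every distance.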

A series of meshes was generated with triangle sizes increasing from 1 to 19. The image below shows the difference in spacing between the generated meshes, with 1 being the tightest and 19 the loosest.

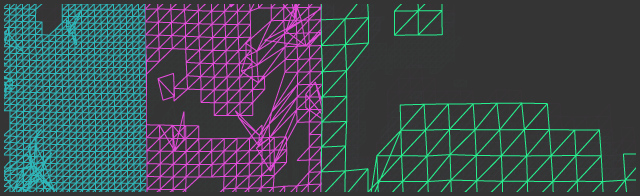
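The post doesn't describe how the meshes themselves were built. One common way to turn a depth image into a mesh is to sample it on a regular grid and join each square of neighboring samples into two triangles; the minimal sketch below assumes that "triangle size" means the grid step in pixels. `Vertex`, `Mesh` and `buildDepthMesh` are hypothetical names, written as plain C++ rather than against openFrameworks' `ofMesh` so the sketch stands on its own.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vertex {
    float x, y, z;  // image column, image row, raw depth value
};

struct Mesh {
    std::vector<Vertex> vertices;
    std::vector<unsigned> indices;  // three indices per triangle
};

// Samples the depth image every `step` pixels and joins neighboring samples
// into two triangles per grid cell, so a larger step gives a looser mesh.
// Cells touching a zero (missing) depth sample are skipped. If `step` does not
// evenly divide width - 1 or height - 1, the leftover border pixels are not covered.
Mesh buildDepthMesh(const std::vector<std::uint16_t>& depth,
                    int width, int height, int step) {
    assert(step > 0 && width > 0 && height > 0);
    assert(depth.size() == static_cast<std::size_t>(width) * height);

    Mesh mesh;
    const int cols = (width - 1) / step + 1;
    const int rows = (height - 1) / step + 1;

    // One vertex per grid sample, stored row by row.
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            const int x = c * step;
            const int y = r * step;
            const std::uint16_t d = depth[static_cast<std::size_t>(y) * width + x];
            mesh.vertices.push_back({static_cast<float>(x), static_cast<float>(y),
                                     static_cast<float>(d)});
        }
    }

    // Two triangles per grid cell, with consistent winding.
    for (int r = 0; r + 1 < rows; ++r) {
        for (int c = 0; c + 1 < cols; ++c) {
            const unsigned tl = static_cast<unsigned>(r * cols + c);
            const unsigned tr = tl + 1;
            const unsigned bl = tl + static_cast<unsigned>(cols);
            const unsigned br = bl + 1;
            const auto& v = mesh.vertices;
            if (v[tl].z <= 0.0f || v[tr].z <= 0.0f || v[bl].z <= 0.0f || v[br].z <= 0.0f)
                continue;  // skip cells with missing depth
            mesh.indices.insert(mesh.indices.end(), {tl, bl, tr, tr, bl, br});
        }
    }
    return mesh;
}
```

Calling `buildDepthMesh` once for each step from 1 to 19 would yield a series of progressively looser meshes, comparable to the progression shown in the image above.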

Mesh sizes were chosen to allow smooth transitions between tighter and looser meshes: levels 2, 4 and 8 were combined into a composite.

Mesh sizes 2, 4 and 8 from left to right.

The meshes were layered onto the captured color image in Photoshop. Tighter meshes were used for darker areas and looser ones for lighter areas, creating a ‘mesh hatching’ style. Originally, the openFrameworks application blended the meshes algorithmically based on the lightness of the image, but blending them by hand produced a much more pleasing result.
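The post doesn't detail the original automatic blend. A minimal sketch of the idea, assuming HSL lightness and two hypothetical thresholds (1/3 and 2/3), maps each pixel of the color image to one of the three mesh levels used in the composite: dark pixels get the tight level-2 mesh and light pixels the loose level-8 mesh. The function names and threshold values are illustrative assumptions, not taken from the original application.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// HSL lightness in [0, 1]: the mean of the largest and smallest channel.
double lightness(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    const int hi = std::max({r, g, b});
    const int lo = std::min({r, g, b});
    return (hi + lo) / 510.0;
}

// Picks a composite mesh level: darker areas get tighter meshes.
// The 1/3 and 2/3 thresholds are hypothetical.
int meshLevelForLightness(double l) {
    assert(l >= 0.0 && l <= 1.0);
    if (l < 1.0 / 3.0) return 2;  // dark: tightest mesh
    if (l < 2.0 / 3.0) return 4;  // mid-tones
    return 8;                     // light: loosest mesh
}

int meshLevelForPixel(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    return meshLevelForLightness(lightness(r, g, b));
}
```

Hard thresholds like these switch abruptly from one mesh to the next at fixed lightness values; a smoother variant could cross-fade between adjacent levels near each threshold.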

Next, the portrait is sketched and watercolored, using the color image as a reference. The Kinect's color camera produces rather low-quality images, which makes small details hard to see while painting.

Initial pencil sketch and first watercolor washes.

Completed watercolor of Kim

Once the watercolor is complete, the mesh lines, printed on tracing paper, are adhered to the back of the painting, which is then placed on a light table for tracing. The side of a driver’s license was used to painstakingly trace each mesh line by hand.

Completed watercolor of Victoria over light table and tracing paper.

Below is a time-lapse video of the watercoloring and of tracing the Kinect mesh lines on the light table.
