“Mimic is a playful interactive installation that allows visitors to engage in a dialogue with a robot arm through gesture. The robot arm tracks people moving in the space, building up individual impressions of each person and reacting to their actions accordingly. These impressions are broken down into different robot feelings: trust, interest, and curiosity which in turn affect how cautious or playful the robot arm is in its responses. Mimic can interact with many people simultaneously changing its behavior and playfulness based on its feelings towards each person.” – Design I/O

To read the full description and a complete list of credits, please visit the post on design-io.com.

Made while I was Minister of Interactive Art at Design I/O.

“Elements is an interactive installation where visitors embody one of four elements: Earth, Wind, Fire or Water. Through these dynamic forms, they can define, transform and sculpt the environment, using the elements to bring life and light together in a playful, ever changing space.” – Design I/O

To read the full description and a complete list of credits, please visit the post on design-io.com.

A screen saver (OS X only) is available for download.

Created by Design I/O: Theo Watson, Emily Gobeille and Nick Hardeman.

Made while I was Minister of Interactive Art at Design I/O.

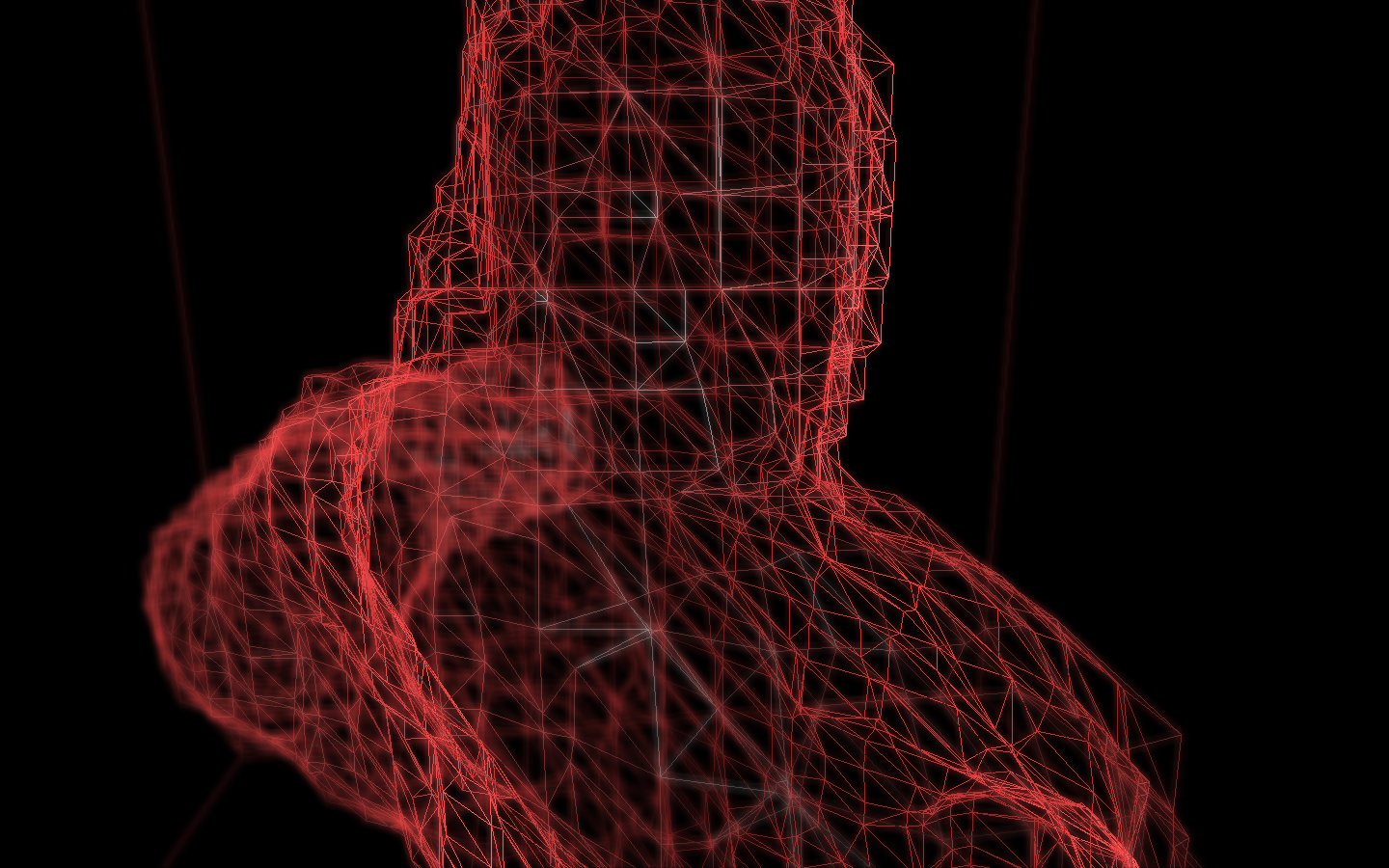

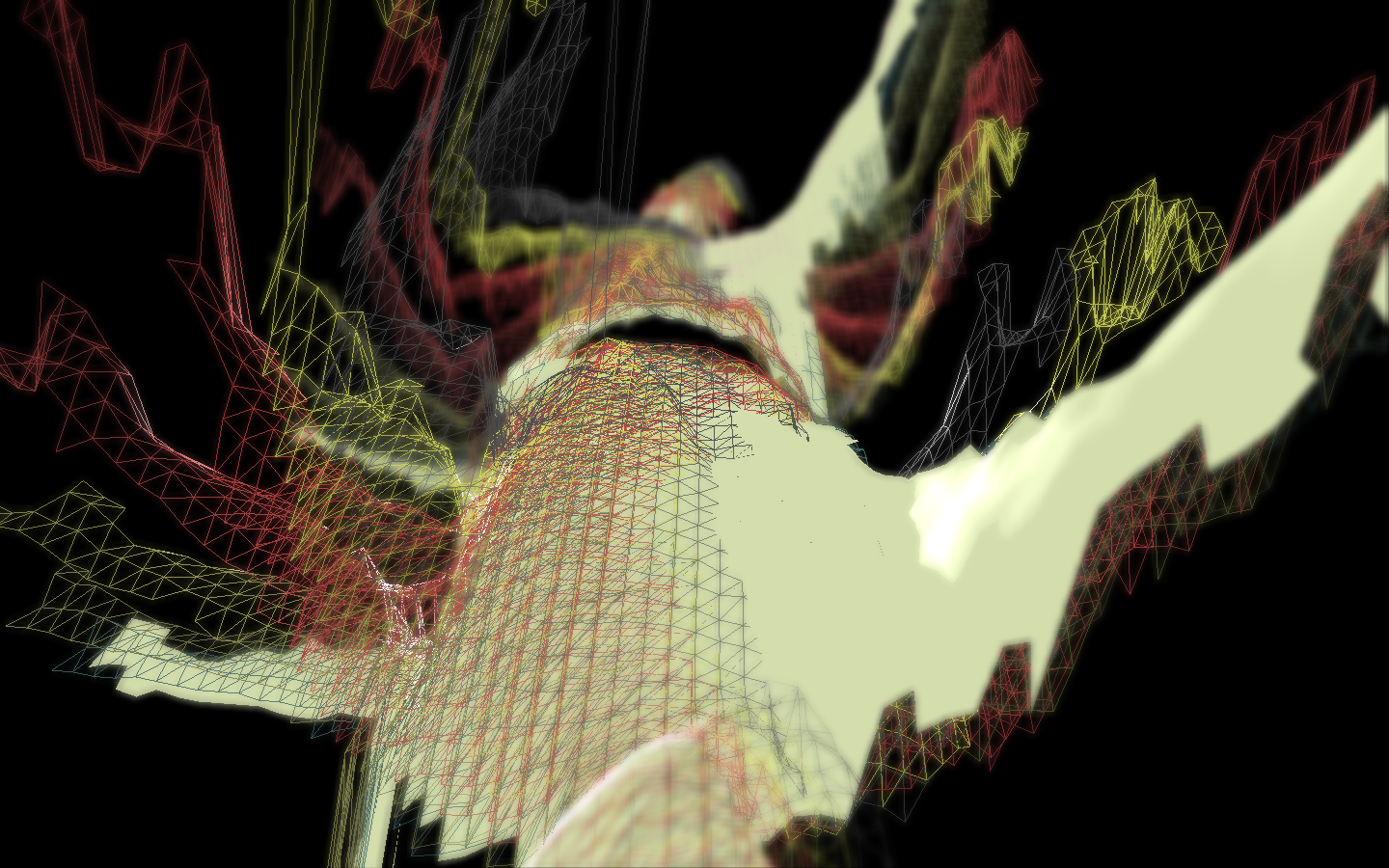

This post describes the process behind the Kinected Portraits series.

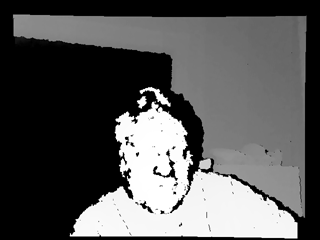

A custom openFrameworks application captured depth information using the Microsoft Kinect. The depth information was calibrated to a color image that was also captured by the Kinect. Below are the raw color image and depth data.
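Calibrating depth to color comes down to back-projecting each depth pixel into 3D and reprojecting that point into the color camera. The sketch below shows the idea in plain C++ rather than through openFrameworks or a Kinect driver. The intrinsic values and the translation-only offset between the two cameras are assumptions for illustration, not the calibration actually used for the portraits.

```cpp
#include <cassert>
#include <cstdint>

// A point in depth-camera space, in millimeters.
struct Point3 {
    float x, y, z;
};

// Pinhole intrinsics. The defaults are rough figures often quoted for the
// first-generation Kinect at 640x480 (an assumption); a real setup would
// use values from its own calibration.
struct Intrinsics {
    float fx = 580.0f;  // focal length in pixels, x
    float fy = 580.0f;  // focal length in pixels, y
    float cx = 320.0f;  // principal point, x
    float cy = 240.0f;  // principal point, y
};

// Back-projects depth pixel (u, v) with a depth reading in millimeters
// into a 3D point in the depth camera's frame.
Point3 depthToPoint(int u, int v, std::uint16_t depthMm, const Intrinsics& k) {
    const float z = static_cast<float>(depthMm);
    return {(u - k.cx) * z / k.fx, (v - k.cy) * z / k.fy, z};
}

// Projects that point into the color camera. For simplicity the color camera
// is assumed to be offset from the depth camera by a pure translation along x
// (`baselineMm`); a full calibration would also include a rotation.
void pointToColorPixel(const Point3& p, const Intrinsics& colorK, float baselineMm,
                       float& outU, float& outV) {
    assert(p.z > 0.0f);  // depth 0 means "no reading" and cannot be projected
    outU = colorK.fx * (p.x - baselineMm) / p.z + colorK.cx;
    outV = colorK.fy * p.y / p.z + colorK.cy;
}
```

Running every valid depth pixel through these two functions gives the color pixel to sample for it, which is what lets the mesh and the photograph line up.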

Kinect color image

Kinect depth data

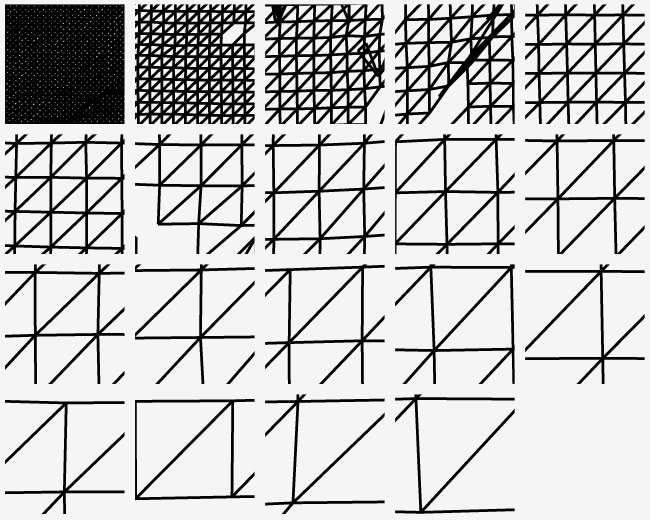

A series of meshes was generated with triangle sizes increasing from 1 to 19. The images below show the difference in spacing between the generated meshes, with 1 being the tightest and 19 the loosest.
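One way to produce meshes with different triangle sizes is to sample the depth image on a regular grid every `step` pixels and split each grid cell into two triangles. The sketch below assumes that approach; `buildDepthMesh` and the `Mesh` struct are hypothetical names, not part of openFrameworks.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Minimal indexed triangle mesh: grid vertices in image space plus raw depth.
struct Mesh {
    struct Vertex {
        int u, v;
        std::uint16_t depth;
    };
    std::vector<Vertex> vertices;
    std::vector<unsigned> indices;  // three indices per triangle
};

// Samples a depth image every `step` pixels and connects neighboring samples
// into triangles. step = 1 gives the tightest mesh; larger steps give bigger
// triangles and a looser mesh. Triangles touching a pixel with no depth
// reading (value 0) are skipped.
Mesh buildDepthMesh(const std::vector<std::uint16_t>& depth, int width, int height, int step) {
    assert(step >= 1 && width > 0 && height > 0);
    assert(depth.size() == static_cast<std::size_t>(width) * height);
    Mesh mesh;
    const int cols = (width - 1) / step + 1;   // samples per row
    const int rows = (height - 1) / step + 1;  // samples per column
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            const int u = c * step, v = r * step;
            mesh.vertices.push_back({u, v, depth[v * width + u]});
        }
    }
    auto valid = [&mesh](unsigned i) { return mesh.vertices[i].depth != 0; };
    for (int r = 0; r + 1 < rows; ++r) {
        for (int c = 0; c + 1 < cols; ++c) {
            // Corners of one grid cell, split into two triangles.
            const unsigned tl = r * cols + c, tr = tl + 1;
            const unsigned bl = tl + cols, br = bl + 1;
            if (valid(tl) && valid(tr) && valid(bl))
                mesh.indices.insert(mesh.indices.end(), {tl, bl, tr});
            if (valid(tr) && valid(bl) && valid(br))
                mesh.indices.insert(mesh.indices.end(), {tr, bl, br});
        }
    }
    return mesh;
}
```

Calling this once per step value from 1 to 19 yields the full series, from the tightest mesh to the loosest.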

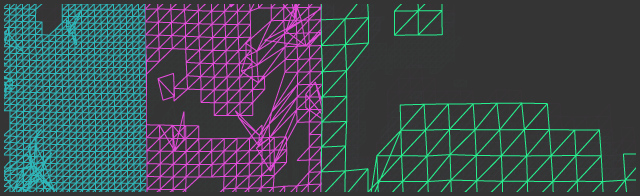

Mesh sizes were chosen to allow smooth transitions between tighter and looser meshes. Levels 2, 4 and 8 were used to create a composite.

Mesh sizes 2, 4 and 8 from left to right.

The meshes were placed onto the captured color image in Photoshop. The tighter meshes were used for the darker areas and the looser ones for the lighter areas, creating a ‘mesh hatching’ style. Originally, the openFrameworks application blended the meshes algorithmically based on the lightness of the image, but compositing by hand produced a much more pleasing result.
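The original algorithmic blending is not described in detail, so the sketch below is only one plausible version of it: it computes a pixel's lightness and cross-fades linearly between levels 2, 4 and 8, favoring tighter meshes in dark areas. The Rec. 709 weights and the 0.5 crossover point are assumptions.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstdint>

// Approximate lightness of an 8-bit RGB pixel in [0, 1], using Rec. 709
// luma weights on the gamma-encoded values (no linearization).
float lightness(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    return (0.2126f * r + 0.7152f * g + 0.0722f * b) / 255.0f;
}

// Blend weights for mesh levels {2, 4, 8} at a given lightness.
// Dark pixels favor level 2 (tightest), light pixels level 8 (loosest);
// between them, neighboring levels cross-fade linearly so the hatching
// changes density smoothly. The 0.5 crossover point is an assumption.
std::array<float, 3> meshBlendWeights(float light) {
    light = std::min(1.0f, std::max(0.0f, light));  // guard against rounding
    if (light <= 0.5f) {
        const float t = light / 0.5f;  // 0 at black, 1 at mid-gray
        return {1.0f - t, t, 0.0f};
    }
    const float t = (light - 0.5f) / 0.5f;  // 0 at mid-gray, 1 at white
    return {0.0f, 1.0f - t, t};
}
```

The weights always sum to 1, so each pixel is covered by at most two neighboring mesh levels; a hand composite can make sharper, more deliberate choices than this per-pixel rule.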

Kinected Portraits is a series that explores the relationship between computer-generated depth information and human perception. The Microsoft Kinect is used to capture the depth information and the associated color image of the subject. A mesh is created from the depth information, with densely packed lines representing darker areas and more loosely packed lines representing lighter areas. The mesh lines are traced by hand over the watercolor to give them a delicate, intimate feel. The ‘mesh hatching’ not only provides a shading method similar to cross-hatching, but also represents the portrait as conveyed by computer data. Read about the process here.

Self-Portrait – 14.75″ x 16″ – watercolor and pen on paper, 2013 Nick Hardeman

Victoria – 19″ x 26″ – watercolor and pen on paper, 2013 Nick Hardeman

Ryan – 23″ x 19″ – watercolor and pen on paper, 2013 Nick Hardeman

Kimmie Jean – 22.5″ x 27″ – watercolor and pen on paper, 2013 Nick Hardeman

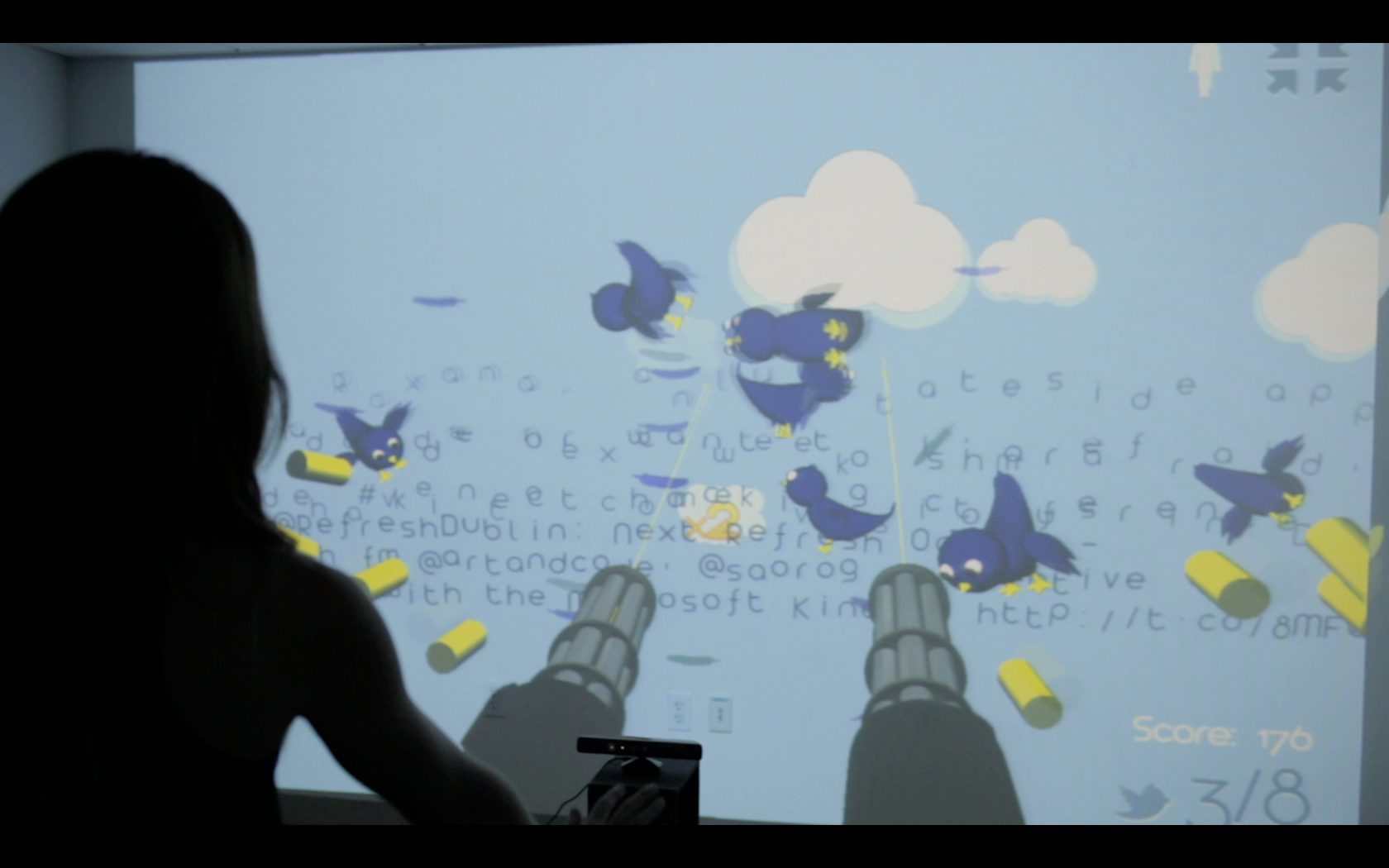

I created these sketches for the Tribeca Film Festival Interactive Day. Unfortunately, I didn’t get much documentation at the event itself, but there are a few photos below and a video of me interacting with the app. Compression degrades the quality of this video, so please watch in HD if you can.

Follow This! is a first-person shooter game that uses the Microsoft Kinect camera. Enter a Twitter search term to create birds, which vary based on the loaded tweets. Once all of the tweets have been gathered, you are equipped with gatling gun arms, and aiming your arms in physical space is reflected in the game. Open your hands to fire and pulverize as many birds as possible, earning score multipliers for quick hits. You must blast an increasing minimum number of birds to advance to the next level; if not, fail whale: game over.
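The scoring rules described above (multipliers for quick hits and a rising minimum per level) can be sketched as follows. The time window, multiplier cap, point values and linear ramp are all hypothetical; the game's actual tuning is not documented here.

```cpp
#include <cassert>

// Hypothetical combo scoring: each hit within `comboWindow` seconds of the
// previous one raises the multiplier (up to a cap); a slower hit resets it.
struct ComboScorer {
    double comboWindow = 0.75;  // seconds allowed between "quick" hits (assumed)
    int maxMultiplier = 8;      // cap on the multiplier (assumed)
    int basePoints = 100;       // points per bird before the multiplier (assumed)

    long score = 0;
    int multiplier = 1;
    double lastHitTime = -1.0;  // negative means no hit yet

    void registerHit(double now) {
        assert(now >= 0.0);
        if (lastHitTime >= 0.0 && now - lastHitTime <= comboWindow) {
            if (multiplier < maxMultiplier) ++multiplier;
        } else {
            multiplier = 1;
        }
        lastHitTime = now;
        score += static_cast<long>(basePoints) * multiplier;
    }
};

// Minimum number of birds needed to clear a level; a linear ramp is assumed.
int birdsRequired(int level, int base = 10, int perLevel = 5) {
    assert(level >= 1);
    return base + (level - 1) * perLevel;
}

// True when the player advances; otherwise it is fail whale, game over.
bool advances(int birdsHit, int level) {
    return birdsHit >= birdsRequired(level);
}
```

For example, hits at 0, 0.5 and 1.0 seconds score 100, 200 and 300 points, while a hit two seconds later drops back to the base 100.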

Curious or having trouble? A debug screen displays the various images generated by the Kinect camera and provides brief instructions and help.

Special thanks to Lauren Licherdell for design and 3D assets.

Thanks for all the help from the OF community!

The game uses the Kinect for user control.

Users control their gatling gun arms independently by aiming their arms in physical space and fire by opening their hands.

Earn score multipliers by shooting birds in rapid succession.

When users have their hands closed, the guns do not fire.

The red rocket bird explodes into bird-seeking rockets when shot.

Shoot birds quickly to get a kill frenzy.
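Turning a tracked arm into an on-screen crosshair can be done by taking the shoulder-to-hand direction and mapping its yaw and pitch onto the screen, with firing gated on an open hand. This is a sketch under assumed conventions (camera-space joints in meters, +y up, +z pointing from the sensor toward the player, no mirroring); `aimFromArm` and `maxAngle` are hypothetical, and detecting whether the hand is open is left out.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

// Screen-space crosshair for one gatling gun arm.
struct Aim {
    float screenX, screenY;
    bool firing;
};

// Maps one arm's shoulder -> hand direction to a crosshair. Yaw and pitch
// are mapped linearly so that +/- maxAngle radians span the full screen;
// the guns fire only while the hand is open.
Aim aimFromArm(const Vec3& shoulder, const Vec3& hand, bool handOpen,
               float screenW, float screenH, float maxAngle = 0.6f) {
    const float dx = hand.x - shoulder.x;
    const float dy = hand.y - shoulder.y;
    const float dz = shoulder.z - hand.z;  // positive when reaching toward the sensor
    const float len = std::sqrt(dx * dx + dy * dy + dz * dz);
    assert(len > 0.0f);  // shoulder and hand must not coincide
    const float yaw = std::atan2(dx, dz);                               // left/right
    const float pitch = std::atan2(dy, std::sqrt(dx * dx + dz * dz));  // up/down
    auto toUnit = [maxAngle](float a) {  // angle -> [0, 1], clamped
        const float t = 0.5f + 0.5f * a / maxAngle;
        return t < 0.0f ? 0.0f : (t > 1.0f ? 1.0f : t);
    };
    // Screen y grows downward, so pitch is inverted.
    return {toUnit(yaw) * screenW, (1.0f - toUnit(pitch)) * screenH, handOpen};
}
```

Calling it separately for the left and right arm gives the two independently aimed guns.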

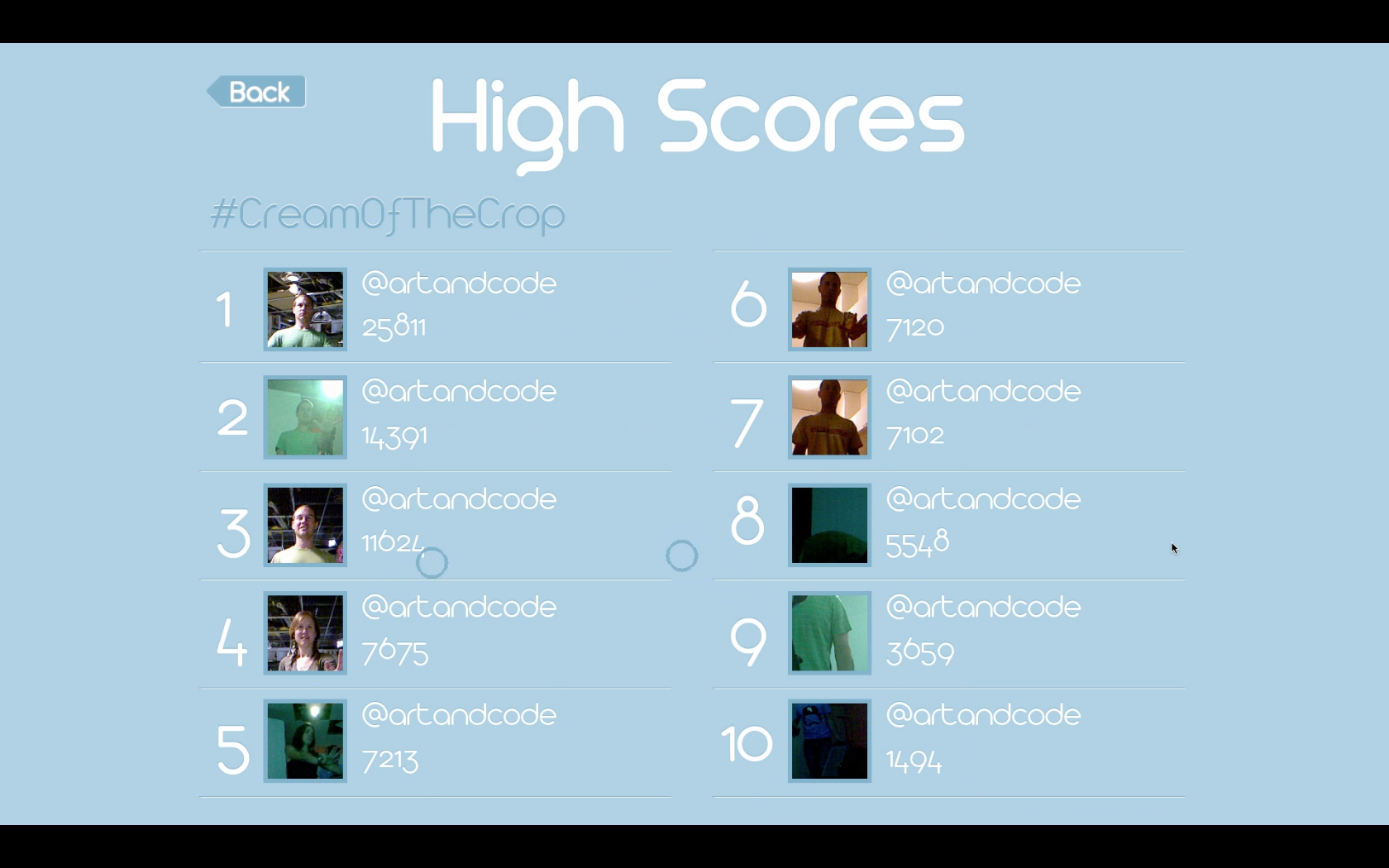

Check out the top highscores.

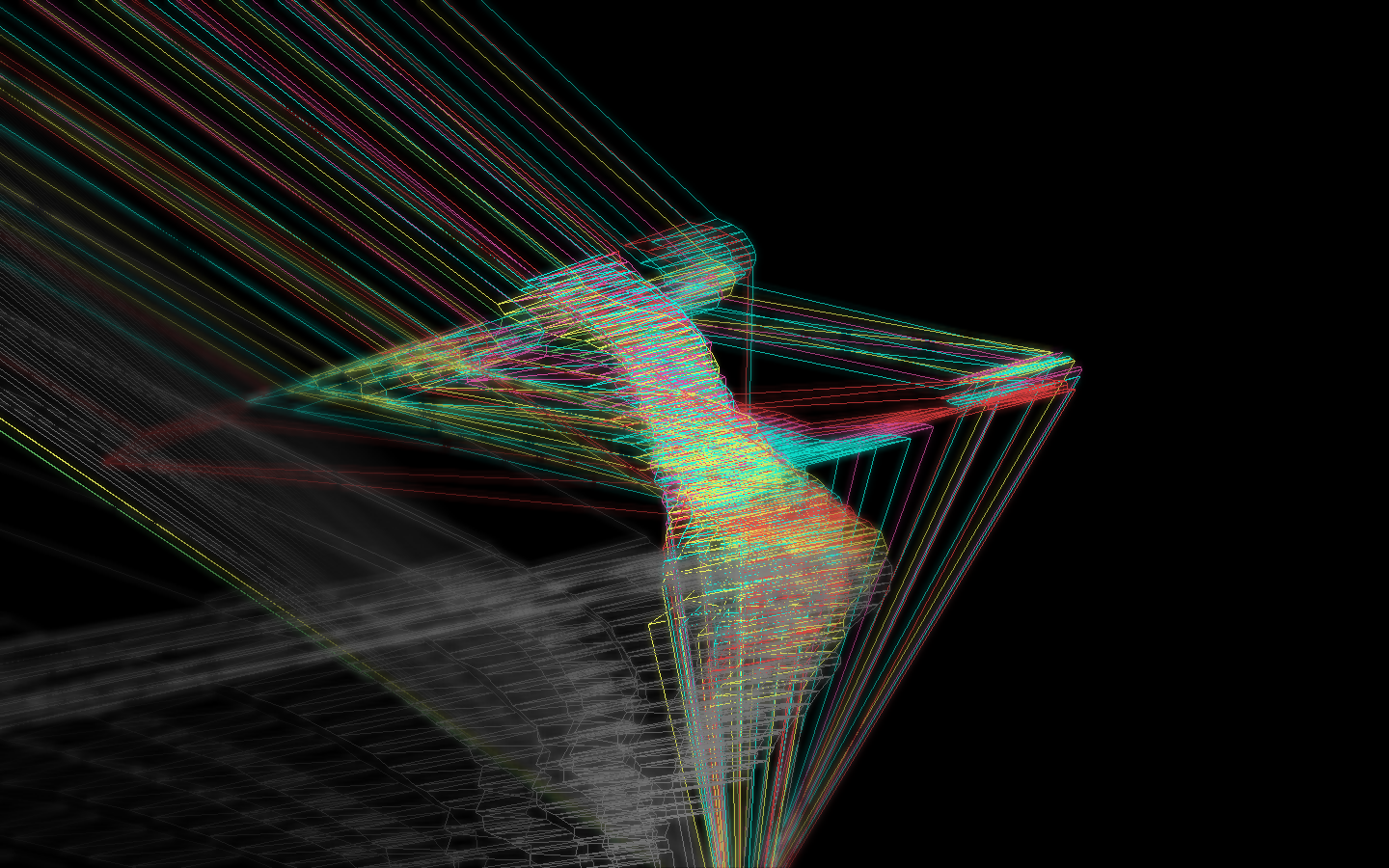

3D Vector Field with Kinect from Nick Hardeman on Vimeo.

This is an initial experiment using the Kinect to generate a 3D vector field. It would not have been possible without the efforts of the OF team and the other people openly hacking the Kinect.
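The post does not say how the field was built. As a rough illustration only, the sketch below stores one vector per cell of a 3D grid and lets each depth point push nearby cells outward with a linear falloff, while a decay step lets the field relax between frames. The grid layout, falloff and the `VectorField3D` name are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3f {
    float x, y, z;
};

// A regular 3D grid of vectors covering the cube [0, size)^3 with `res` cells
// per axis. Points (e.g. Kinect depth points transformed into the field's
// local space) push nearby cells outward. Layout and falloff are assumptions.
class VectorField3D {
public:
    VectorField3D(int res, float size)
        : res_(res), cell_(size / res),
          v_(static_cast<std::size_t>(res) * res * res, Vec3f{0.0f, 0.0f, 0.0f}) {
        assert(res > 0 && size > 0.0f);
    }

    // Adds an outward push from p to every cell center within `radius`,
    // scaled by `strength` and fading linearly to zero at the radius.
    void addRepulsion(const Vec3f& p, float radius, float strength) {
        const int i0 = cellIndex(p.x - radius), i1 = cellIndex(p.x + radius);
        const int j0 = cellIndex(p.y - radius), j1 = cellIndex(p.y + radius);
        const int k0 = cellIndex(p.z - radius), k1 = cellIndex(p.z + radius);
        for (int k = k0; k <= k1; ++k)
            for (int j = j0; j <= j1; ++j)
                for (int i = i0; i <= i1; ++i) {
                    const float dx = (i + 0.5f) * cell_ - p.x;
                    const float dy = (j + 0.5f) * cell_ - p.y;
                    const float dz = (k + 0.5f) * cell_ - p.z;
                    const float d = std::sqrt(dx * dx + dy * dy + dz * dz);
                    if (d == 0.0f || d >= radius) continue;
                    // Unit direction away from p, times the linear falloff.
                    const float f = strength * (1.0f - d / radius) / d;
                    Vec3f& v = v_[index(i, j, k)];
                    v.x += dx * f;
                    v.y += dy * f;
                    v.z += dz * f;
                }
    }

    // Vector stored in the cell containing p (nearest-cell lookup, clamped).
    Vec3f at(const Vec3f& p) const {
        return v_[index(cellIndex(p.x), cellIndex(p.y), cellIndex(p.z))];
    }

    // Scales every vector so the field relaxes when nothing is pushing it.
    void decay(float factor) {
        for (Vec3f& v : v_) {
            v.x *= factor;
            v.y *= factor;
            v.z *= factor;
        }
    }

private:
    int cellIndex(float coord) const {
        const int i = static_cast<int>(std::floor(coord / cell_));
        return std::min(res_ - 1, std::max(0, i));
    }
    std::size_t index(int i, int j, int k) const {
        return (static_cast<std::size_t>(k) * res_ + j) * res_ + i;
    }

    int res_;
    float cell_;
    std::vector<Vec3f> v_;
};
```

Each frame, one would call `decay` once and then `addRepulsion` for a subsample of depth points; particles can then read `at(position)` to be steered by the field.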

Some images can be seen on Flickr here.